
By Lambert Strether of Corrente.

As readers have understood for some time, AI = BS. (By “AI” I mean “Generative AI,” as in ChatGPT and similar projects based on Large Language Models (LLMs).) What readers may not know is that besides being bullshit on the output side (the hallucinations, the delvish), AI is also bullshit on the input side, in the “prompts” “engineered” to cause the AI to generate that output. And yet we allow, indeed we encourage, AI to use enormous and increasing amounts of scarce electric power (not to mention water). It’s almost as if AI is waste product all the way through!

In this very brief post, I will first demonstrate AI’s enormous power (and water) consumption. Then I will define “prompt engineering,” looking at OpenAI’s technical documentation in some detail. I will then show the similarities between prompt “engineering,” so-called, and the ritual incantations of ancient magicians (though I suppose alchemists would have done as well). I do not mean “ritual incantations” as a metaphor (like Great Runes) but as a fair description of the actual process used. I will conclude by questioning the value of allowing Silicon Valley to make any society-wide capital investment decisions at all. Now let’s turn to AI power consumption.

AI Power Consumption

From the Wall Street Journal, “Artificial Intelligence’s ‘Insatiable’ Energy Needs Not Sustainable, Arm CEO Says” (ARM being a chip design company):

AI models such as OpenAI’s ChatGPT “are just insatiable in terms of their thirst” for electricity, Haas said in an interview. “The more information they gather, the smarter [sic] they are, but the more information they gather to get smarter, the more power it takes.” Without greater efficiency, “by the end of the decade, AI data centers could consume as much as 20% to 25% of U.S. power requirements. Today that’s probably 4% or less,” he said. “That’s hardly very sustainable, to be honest with you.”

From Forbes, “AI Power Consumption: Rapidly Becoming Mission-Critical”:

Big Tech is spending tens of billions quarterly on AI accelerators, which has led to an exponential increase in power consumption. Over the past few months, multiple forecasts and data points reveal soaring data center electricity demand, and surging power consumption. The rise of generative AI and surging GPU shipments is causing data centers to scale from tens of thousands to 100,000-plus accelerators, shifting the emphasis to power as a mission-critical problem to solve… The [International Energy Agency (IEA)] is projecting global electricity demand from AI, data centers and crypto to rise to 800 TWh in 2026 in its base case scenario, a nearly 75% increase from 460 TWh in 2022.
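The IEA figures in the Forbes excerpt can be checked with a line of arithmetic. This is a minimal sketch: the function name `percent_increase` is my own, and the two inputs are simply the 460 TWh and 800 TWh figures quoted above.

```python
def percent_increase(old: float, new: float) -> float:
    """Return the percentage increase going from old to new."""
    return (new - old) / old * 100.0


# Figures as quoted in the Forbes excerpt (IEA base case), in TWh.
iea_2022_twh = 460.0
iea_2026_twh = 800.0

increase = percent_increase(iea_2022_twh, iea_2026_twh)
print(f"{increase:.1f}% increase")  # roughly 74%, i.e. "nearly 75%"
```

So the “nearly 75%” in the excerpt is consistent with its own numbers.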

From the World Economic Forum,

AI requires significant computing power, and generative AI systems might already use around 33 times more energy to complete a task than task-specific software would.

As these systems gain traction and further develop, training and running the models will drive an exponential increase in the number of data centres needed globally – and associated energy use. This will put increasing pressure on already strained electrical grids.

Training generative AI, in particular, is extremely energy intensive and consumes much more electricity than traditional data-centre activities. As one AI researcher said, ‘When you deploy AI models, you have to have them always on. ChatGPT is never off.’ Overall, the computational power needed for sustaining AI’s growth is doubling roughly every 100 days.
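To get a feel for what “doubling roughly every 100 days” implies, here is a small sketch that converts a doubling period into a yearly growth factor. It assumes the doubling rate holds steady for a full year, which the WEF excerpt does not claim, and it concerns computational power, not electricity as such. The function name `growth_factor` is my own.

```python
def growth_factor(days: float, doubling_period_days: float = 100.0) -> float:
    """Growth multiple after `days`, assuming steady doubling every period."""
    return 2.0 ** (days / doubling_period_days)


# Assumption: the 100-day doubling rate stays constant for 365 days.
per_year = growth_factor(365)
print(f"about {per_year:.1f}x per year")
```

Under that assumption, demand for computation would grow more than twelvefold in a single year.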

And from the Soufan Center, “The Energy Politics of Artificial Intelligence as Great Power Competition Intensifies”:

Generative AI has emerged as one of the most energy-intensive technologies on the planet, drastically driving up the electricity consumption of data centers and chips…. The U.S. electrical grid is extremely antiquated, with much of the infrastructure built in the 1960s and 1970s. Despite parts of the system being upgraded, the overall aging infrastructure is struggling to meet our electricity demands–AI puts even more pressure on this demand. Thus, the need for a modernized grid powered by efficient and clean energy is more urgent than ever…. [T]he ability to power these systems is now a matter of national security.

Translating, electric power is going to be increasingly scarce, even when (if) we start to modernize the grid. When push comes to shove, where do you think the power will go? To your Grandma’s air conditioner in Phoenix, where she’s sweltering at 116°F, or to OpenAI’s data centers and training sets? Especially when “national security” is involved?

AI Prompt “Engineering” Defined and Exemplified

Wikipedia (sorry) defines prompt “engineering” as follows:

Prompt engineering is the process of structuring an instruction that can be interpreted and understood [sic] by a generative AI model. A prompt is natural language text describing the task that an AI should perform: a prompt for a text-to-text language model can be a query such as “what is Fermat’s little theorem?”, a command such as “write a poem about leaves falling”, or a longer statement including context, instructions, and conversation history.

(“[U]nderstood,” of course, implies that the AI can think, which it cannot.) Much depends on how the prompt is written. OpenAI has “shared” technical documentation on this topic: “Prompt engineering.” Here is the opening paragraph:

As you can see, I have helpfully underlined the weasel words: “Better,” “sometimes,” and “we encourage experimentation” don’t give me any confidence that there’s any actual engineering going on at all. (If we were devising an engineering manual for building, well, an electric power generating plant, do you think that “we encourage experimentation” would appear in it? Then why would it here?)

Having not defined its central topic, OpenAI then goes on to recommend “Six strategies for getting better results” (whatever “better” might mean). Here’s one:

So, “fewer fabrications” is an acceptable outcome? For whom, exactly? Surgeons? Trial lawyers? Bomb squads? Another:

“Tend” how often? We don’t really know, do we? Another:

Correct answers not “reliably” but “more reliably”? (Who do these people think they are? Boeing? “Doors not falling off more reliably” is supposed to be exemplary?) And another:

“Representative.” “Comprehensive.” I guess that means keep stoking the model ’til you get the result the boss wants (or the client). And finally:

The mind reels.

The bottom line here is that the prompt engineer doesn’t know how the prompt works, why any given prompt yields the result that it does, doesn’t even know that AI works. In fact, the same prompt doesn’t even give the same results each time! Stephen Wolfram explains:

[W]hen ChatGPT does something like write an essay what it’s essentially doing is just asking over and over again “given the text so far, what should the next word be?”—and each time adding a word.

Like glorified autocorrect, and we all know how good autocorrect is. More:

But, OK, at each step it gets a list of words with probabilities. But which one should it actually pick to add to the essay (or whatever) that it’s writing? One might think it should be the “highest-ranked” word (i.e. the one to which the highest “probability” was assigned). But this is where a bit of voodoo begins to creep in. Because for some reason—that maybe one day we’ll have a scientific-style understanding of—if we always pick the highest-ranked word, we’ll typically get a very “flat” essay, that never seems to “show any creativity” (and even sometimes repeats word for word). But if sometimes (at random) we pick lower-ranked words, we get a “more interesting” essay.

And, in keeping with the idea of voodoo, there’s a particular so-called “temperature” parameter that determines how often lower-ranked words will be used, and for essay generation, it turns out that a “temperature” of 0.8 seems best. (It’s worth emphasizing that [whatever that means] [whose?].
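The word-picking step Wolfram describes can be sketched in a few lines. This is an illustration only, under stated assumptions: the candidate words and their probabilities are made up, the function names `apply_temperature` and `pick_next_word` are my own, and the rescaling used here (raising each probability to the power 1/temperature, then renormalizing) is one common formulation, not something the quoted passage spells out.

```python
import math
import random


def apply_temperature(probs, temperature):
    """Rescale a word -> probability mapping by temperature and renormalize."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    # p ** (1 / T), computed via logs; T < 1 favors top-ranked words more,
    # T > 1 gives lower-ranked words a better chance.
    weights = {
        word: math.exp(math.log(p) / temperature)
        for word, p in probs.items()
        if p > 0
    }
    total = sum(weights.values())
    return {word: w / total for word, w in weights.items()}


def pick_next_word(probs, temperature, rng):
    """Pick one word: always the top-ranked at 0, else a weighted random draw."""
    if temperature == 0:
        return max(probs, key=probs.get)
    scaled = apply_temperature(probs, temperature)
    words = list(scaled)
    return rng.choices(words, weights=[scaled[w] for w in words], k=1)[0]


# Hypothetical next-word candidates; the numbers are invented for illustration.
candidates = {"learn": 0.45, "predict": 0.35, "make": 0.15, "understand": 0.05}

rng = random.Random(0)  # seeded only so that reruns of this sketch repeat
greedy = [pick_next_word(candidates, 0, rng) for _ in range(5)]
sampled = [pick_next_word(candidates, 0.8, rng) for _ in range(5)]
print(greedy)   # the top-ranked word every time: the "flat" case
print(sampled)  # a weighted random mix of words
```

Remove the fixed seed and the second list changes from run to run, which is the point: the same prompt need not give the same result twice.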

This really is bullshit. These people are like an ant pushing a crumb around until it randomly falls in the nest. The Hacker’s Dictionary has a term that covers what Wolfram is exuding excitement about, which covers prompt “engineering”:

voodoo programming: n.

[from George Bush Sr.’s “voodoo economics”]

1. The use by guess or cookbook of an obscure or hairy system, feature, or algorithm that one does not truly understand. The implication is that the technique may not work, and if it doesn’t, one will never know why. Almost synonymous with black magic, except that black magic typically isn’t documented and nobody understands it. Compare magic, deep magic, heavy wizardry, rain dance, cargo cult programming, wave a dead chicken, SCSI voodoo.

2. Things programmers do that they know shouldn’t work but they try anyway, and which sometimes actually work, such as recompiling everything.

I rest my case.

AI “Prompt” Engineering as Ritual Incantation

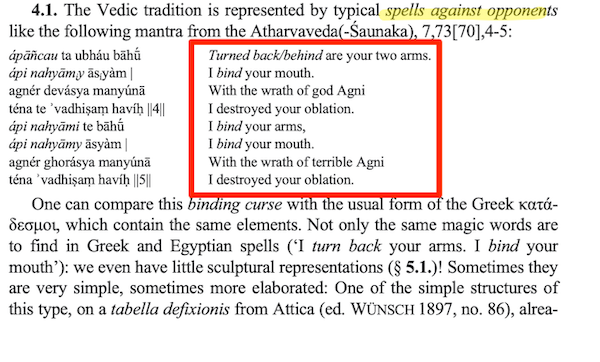

From Velizar Sadovski (PDF), “Ritual Spells and Practical Magic for Benediction and Malediction: From India to Greece, Rome, and Beyond (Speech and Performance in Veda and Avesta, I.)”, here is an example of an “Old Indian” Vedic ritual incantation (c. 900 BCE):

The text boxed in red is a prompt — natural language text describing the task — albeit addressed to a being even less scrutable than a Large Language Model. The expected outcome is confusion to an enemy. Like OpenAI’s ritual incantations, we don’t know why the prompt works, how it works, or even that it works. And as Wolfram explains, the outcome may be different each time. Hilariously, one can imagine the Vedic “engineer” tweaking their prompt: “two arms” gives better results than just “arms,” binding the arms first, then the mouth works better; repeating the bindings twice works even better, and so forth. And of course you’ve got to ask the right divine being (Agni, in this case), so there’s a lot of professional skill involved. No doubt the Vedic engineer feels free to come up with “creative ideas”!

Conclusion

The AI bubble — pace Goldman — seems far from being popped. AI’s ritual incantations are currently being chanted in medical data, local news, eligibility determination, shipping, and spookdom, not to mention the Pentagon (those Beltway bandits know a good think when they see it). But the AI juice has to be worth the squeeze. Cory Doctorow explains the economics:

Eventually, the industry will have to uncover some mix of applications that will cover its operating costs, if only to keep the lights on in the face of investor disillusionment (this isn’t optional – investor disillusionment is an inevitable part of every bubble).

Now, there are lots of low-stakes applications for AI that can run just fine on the current AI technology, despite its many – and seemingly inescapable – errors (“hallucinations”). People who use AI to generate illustrations of their D&D characters engaged in epic adventures from their previous gaming session don’t care about the odd extra finger. If the chatbot powering a tourist’s automatic text-to-translation-to-speech phone tool gets a few words wrong, it’s still much better than the alternative of speaking slowly and loudly in your own language while making emphatic hand-gestures.

There are lots of these applications, and many of the people who benefit from them would doubtless pay something for them. The problem – from an AI company’s perspective – is that these aren’t just low-stakes, they’re also low-value. Their users would pay something for them, but not very much.

For AI to keep its servers on through the coming trough of disillusionment, it will have to locate high-value applications, too. Economically speaking, the function of low-value applications is to soak up excess capacity and produce value at the margins after the high-value applications pay the bills. Low-value applications are a side-dish, like the coach seats on an airplane whose total operating expenses are paid by the business class passengers up front. Without the principal income from high-value applications, the servers shut down, and the low-value applications disappear:

Now, there are lots of high-value applications the AI industry has identified for its products. Broadly speaking, these high-value applications share the same problem: they are all high-stakes, which means they are very sensitive to errors. Mistakes made by apps that produce code, drive cars, or identify cancerous masses on chest X-rays are extremely consequential.

But why would anybody build a “high stakes” product on a technology that’s driven by ritual incantations? Airbus, for example, doesn’t include “Lucky Rabbit’s Foot” as a line item for a “fully loaded” A350, do they?

There’s so much stupid money sloshing about that we don’t know what to do with it. Couldn’t we give consideration to the idea of putting capital allocation under some sort of democratic control? Because the tech bros and VCs seem to be doing a really bad job. Maybe we could even do better than powering your Grandma’s air conditioner.