Before our current age of nuclear war anxiety, there was widespread concern about an earlier terrifying weapon of mass destruction: poison gas. I will describe the history of war gases in the context of our current concerns about atomic war. It is an ugly topic, but examining it may provide some hope for a way out of our current predicament.

How It Started

The origins of chemical weaponry trace back to antiquity, when armies first used noxious substances and smoke to gain an advantage in battle. In sieges, defenders and attackers alike employed burning sulfur, pitch, or quicklime to create choking or blinding clouds. The Byzantines’ famed Greek fire, developed in the 7th century, likewise combined chemical combustion with psychological terror, though it was an incendiary rather than a toxic weapon. These early efforts, however, were rudimentary and lacked scientific control.

It was not until the scientific advances of the late 18th and 19th centuries that the foundation for modern chemical warfare was laid. Pioneering chemists, among them Carl Wilhelm Scheele, Humphry Davy, and his brother John Davy, isolated and described a variety of highly toxic gases, including chlorine, phosgene, and hydrogen cyanide. These substances were known for their suffocating and lethal properties, and some military thinkers began to consider their potential use. During the Crimean War in 1854, British scientist Lyon Playfair even proposed deploying shells filled with cacodyl cyanide against Russian forces, though the idea was rejected as contrary to the norms of civilized warfare.

The first systematic, large-scale use of chemical weapons came during the First World War. As the Western Front descended into stalemate, Germany turned to its growing chemical industry and the expertise of chemist Fritz Haber to develop a new weapon. On April 22, 1915, during the Second Battle of Ypres, German forces released over 150 tons of chlorine gas from cylinders, creating a greenish-yellow cloud that drifted across no-man’s-land into Allied trenches. The attack killed and wounded thousands and shocked the world. In the years that followed, both sides deployed an escalating array of agents: first phosgene and diphosgene, which were more potent choking agents, and then, from 1917, mustard gas, which caused severe chemical burns and contaminated the battlefield for long periods. By the end of the war, chemical weapons had inflicted more than a million casualties and left a lasting scar on the collective memory of the conflict.

On October 15, 1918, just weeks before the Armistice, Adolf Hitler was serving as a corporal in Bavarian Reserve Infantry Regiment 16. On that day, his unit near Ypres in Belgium came under a British mustard gas attack. Hitler reportedly suffered temporary blindness and lung irritation from exposure to the gas cloud. He was evacuated to a military hospital, where he was still recovering when Germany signed the Armistice on November 11, 1918. Hitler later described his hospitalization and the news of Germany’s surrender as one of the most traumatic experiences of his life, shaping a sense of betrayal and anger that would feed into his postwar political ideology.

Chemical Deterrence

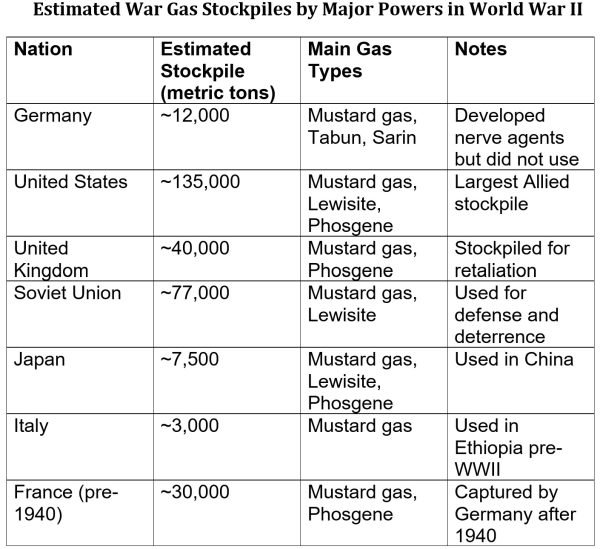

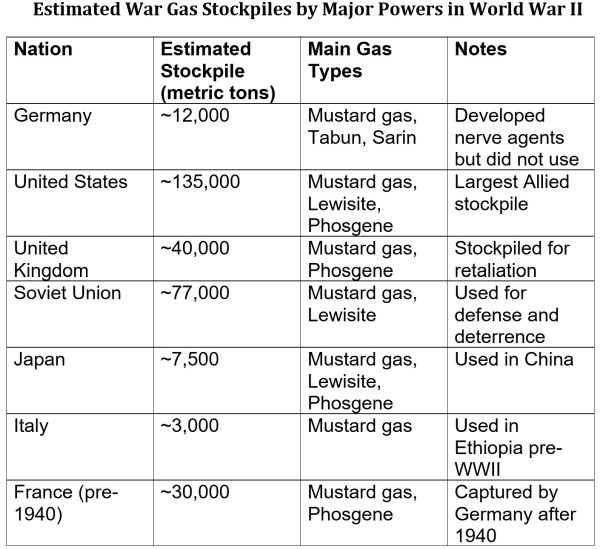

The interwar years saw widespread condemnation of chemical warfare and the signing of the 1925 Geneva Protocol, which banned the use of chemical and biological weapons in war but did not prohibit their development or stockpiling. Research therefore continued, much of it in secret: nations stockpiled mustard gas and phosgene, and Nazi Germany developed new nerve agents such as Tabun and Sarin in the 1930s.

Although WWII was vastly more destructive than WWI, war gases were not used on the battlefields of the European or Pacific theaters. The principal reason was fear of retaliation: the Allied and Axis powers had amassed substantial stocks of chemical weapons that acted as mutual deterrents. The exception to this standoff was Japan’s use of war gases against Chinese soldiers and civilians from 1937 to 1945. China had no gas weapons with which to retaliate.

The history of chemical weapons reflects a tension between scientific progress, military innovation, and ethical restraint. What began as crude battlefield smoke evolved, through industrial and scientific advances, into some of the most feared weapons of modern war, provoking both horror and international efforts to control their use. Public revulsion and sustained diplomacy eventually produced comprehensive bans, culminating in the 1993 Chemical Weapons Convention, which prohibits the development, production, stockpiling, and use of chemical weapons.

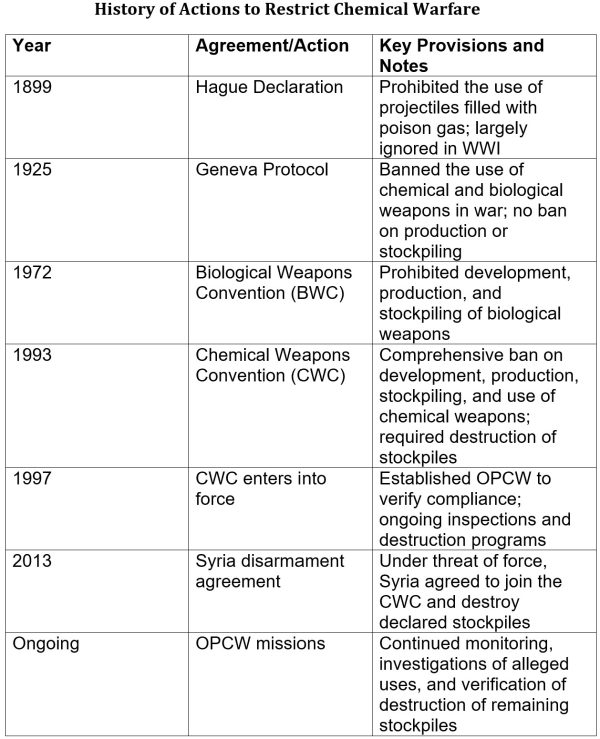

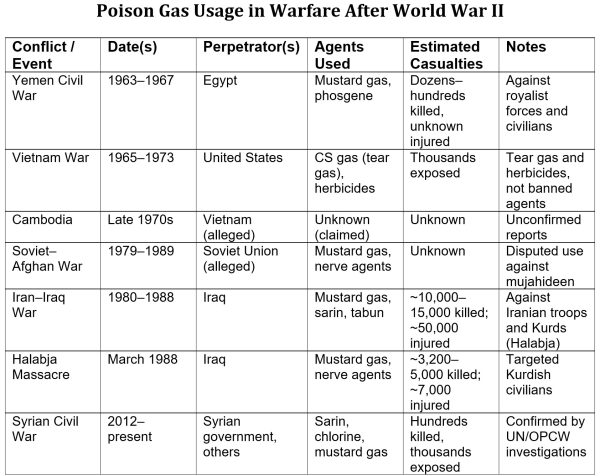

Final Agonies

Poison gas use in warfare did not end completely after WWII, but the major powers gradually abandoned such weapons because of deterrence, treaty restrictions, and operational limitations. Chemical weapons were used sporadically in regional wars, though the belligerents seldom acknowledged it. The most extensive post-WWII use was by Iraq, which employed mustard gas and nerve agents in its 1980 to 1988 war with Iran, yet in none of these conflicts were chemical weapons strategically decisive. Military technology also increasingly favored precision targeting over area-effect munitions, further diminishing the utility of an internationally banned weapon.

From Poison Gas to Radioactive Fallout

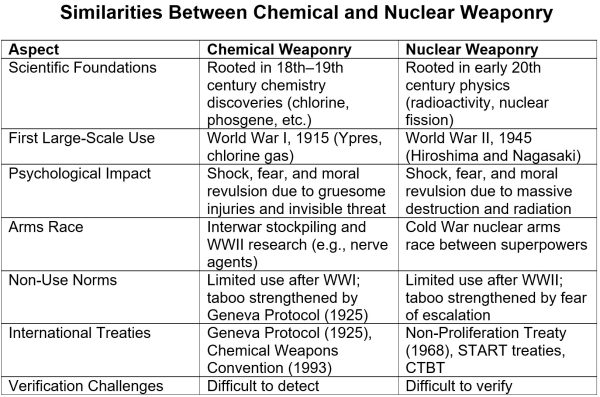

The evolution of nuclear weapons has followed a course parallel to that of chemical weapons. Since the bombings of Hiroshima and Nagasaki, nations have accumulated large arsenals of weapons so frightfully devastating that they have not been used in war since 1945. This balance of terror has been accompanied by diplomatic efforts to reduce the danger by limiting the size and composition of nuclear arsenals through arms control treaties.

The historical parallels extend to the lives of Fritz Haber and J. Robert Oppenheimer. Both were eminent scientists who turned their talents toward designing weapons for patriotic reasons.

Haber, who was born into a Jewish family, believed that his leadership of Germany’s war gas program in WWI would protect him from persecution under the Hitler regime. Instead, he was effectively forced out of his prestigious post as director at the Kaiser Wilhelm Institute and left Germany in 1933. His scientific achievements included the Haber process, which enabled the efficient synthesis of ammonia for fertilizer and greatly expanded global food supplies, and for which he received the 1918 Nobel Prize in Chemistry, awarded in 1919. A darker legacy of his work on toxic gases was research at his institute on cyanide-based pesticides, which others later developed into Zyklon B, the gas used in the Nazi extermination camps. Ironically, Haber died in 1934 while on his way to assume the directorship of the institute in Palestine that would become the Weizmann Institute.

Oppenheimer set aside a distinguished career in physics to direct the Los Alamos laboratory of the Manhattan Project, which developed the first atomic bombs. He was motivated by the fear that Nazi Germany would develop a nuclear weapon. When Germany was defeated, that threat no longer existed, but he was unwilling to oppose the use of the bomb against Japan. Afterward, troubled by the moral implications, he worked to prevent further use of nuclear weapons, advocating international agreements to forestall an arms race and curb proliferation, and hoping that such weapons would serve as a political deterrent rather than a military tool. His opposition to developing the even more devastating hydrogen bomb contributed to the government’s revocation of his security clearance in 1954. He was cast out of the inner circle of U.S. nuclear policy, publicly humiliated, and made a symbol of the dangers of dissent during the Cold War.

Conclusion

If the future of nuclear weapons continues to parallel that of chemical weapons, we may hope that the current balance of terror will be ended by arms control agreements culminating in the dismantling of nuclear arsenals. Although neither chemical nor nuclear weapons can be un-invented, their availability and the willingness to use them can be effectively restricted. Moreover, the steady advance in the potency of non-nuclear weapons promises to meet the defensive military security requirements of the world’s nations. The final irony may be that the quest for an ultimate weapon becomes self-extinguishing.